How to solve the biggest data issues in online real estate

20 April 2021 | 3 min read

In the previous article, we went over the four biggest data struggles that real estate portals are facing today.

These are the biggest struggles we identified:

● Limited user tracking

● Indirect user preferences

● Poor listing data quality

● Cold start problem

But how exactly should you solve those issues? Let’s have a look:

Analyze data quality

As mentioned in the previous article, an exploratory data analysis (EDA) is essential to assess the quality of user and listings data. EDA is the process of performing initial investigations on data to discover patterns, spot anomalies, test hypotheses, and check assumptions using summary statistics and graphical representations.

“1 in 3 business leaders don’t trust the information they use to make decisions”

At Co-libry, we use this process to get to know the data of new clients. We look at every field the client has in their database, check for anomalies, and map each field into our dedicated template.

The EDA is always the first thing we do when working with a new client. By doing the EDA first, we can identify the data’s potential and fix any data quality issues we encounter in the early stages. Doing this ensures we have everything we need to implement effective AI solutions.

EDAs fulfill an important internal function when creating AI solutions, saving us time on implementation and troubleshooting further down the road. Even though the EDA is an internal process, we share the results with our clients. Why? Because it empowers them to identify problems in the data processing pipelines across their business and to address those issues with a data-driven approach. In most cases, this already gives them a quick win for their search engine performance.

In machine learning and AI, the well-known saying “garbage in, garbage out” holds true as well: if the data used to train your AI models is of poor quality, your results will be too.

We recognize that there is no standard approach to collecting and structuring data. Every client has a different tech stack and a different way of managing their database structure, so every EDA differs somewhat. Below, you can find the typical outline of our EDA.

EDA

Listings data

First, we look at the dataset as a whole: how many variables and observations it contains, how many duplicates there are, how many variables are barely filled in (more than 95% NA values), how many variables are completely filled in, and so on.
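The overview step above can be sketched in a few lines of Python. This is a minimal illustration, not our production tooling: it assumes the listings are loaded as a list of dicts (one per listing), treats `None` and empty strings as NA, and the function name `dataset_overview` is hypothetical.

```python
from typing import Any

# Values treated as missing (NA) in this sketch; adapt to your own data.
NA_VALUES = (None, "")


def dataset_overview(rows: list[dict[str, Any]], sparse_threshold: float = 0.95) -> dict[str, Any]:
    """Summarise a listings table given as a list of records (one dict per listing)."""
    if not rows:
        raise ValueError("the dataset is empty")
    columns = sorted({key for row in rows for key in row})

    # Count exact duplicate rows by turning each record into a hashable key.
    seen: set[tuple] = set()
    n_duplicates = 0
    for row in rows:
        key = tuple(repr(row.get(col)) for col in columns)
        if key in seen:
            n_duplicates += 1
        else:
            seen.add(key)

    # Share of NA values per variable; a missing key also counts as NA.
    na_share = {
        col: sum(row.get(col) in NA_VALUES for row in rows) / len(rows)
        for col in columns
    }
    return {
        "n_variables": len(columns),
        "n_observations": len(rows),
        "n_duplicates": n_duplicates,
        "barely_filled": [col for col, share in na_share.items() if share > sparse_threshold],
        "fully_filled": [col for col, share in na_share.items() if share == 0],
    }
```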

Next, we split the variables into four groups: string variables, categorical variables, numeric variables, and timestamp variables.

String variables are text strings on which no arithmetic operations can be performed (e.g., a property description). A categorical variable can take on one of a limited, and usually fixed, number of possible values (e.g., the transaction type of a listing: for sale or for rent); its values can be stored as digits as well as text. Numeric variables are attributes or values described by a number (e.g., the price of a property). Lastly, timestamp variables are sequences of characters or encoded information identifying when a particular event occurred, usually giving the date and time of day (e.g., the date and time a listing was created).
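To illustrate the split into four groups, here is a rough heuristic for guessing a variable's type from its values. The function `infer_variable_type` and the `max_categories` cut-off are hypothetical choices made for this sketch. Variables with few distinct values are treated as categorical even when they are digits, in line with the definition above; in practice the final classification still needs a human check.

```python
from datetime import datetime
from typing import Any


def _is_timestamp(value: Any) -> bool:
    """True for datetime objects and strings in ISO 8601 format (e.g. '2021-04-20')."""
    if isinstance(value, datetime):
        return True
    if isinstance(value, str):
        try:
            datetime.fromisoformat(value)
            return True
        except ValueError:
            return False
    return False


def infer_variable_type(values: list[Any], max_categories: int = 20) -> str:
    """Guess whether a variable is timestamp, categorical, numeric or string."""
    present = [v for v in values if v not in (None, "")]
    if not present:
        return "empty"
    if all(_is_timestamp(v) for v in present):
        return "timestamp"
    # Few distinct values: categorical, whether stored as digits or as text.
    if len(set(present)) <= max_categories:
        return "categorical"
    if all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in present):
        return "numeric"
    return "string"
```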

Each group of variables calls for a different approach, but some checks are standard, e.g., how often a variable is filled in correctly. A variable can be filled in 100% of the time and still have 99% of its values equivalent to NA.

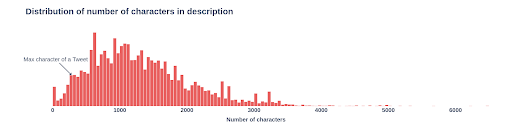
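A fill rate that ignores placeholder values can reveal variables that look complete but aren't. In this sketch the set of placeholders (`PLACEHOLDERS`) and the function `fill_rates` are hypothetical; the right list depends on how each portal encodes missing values.

```python
from typing import Any

# Hypothetical placeholder values: "filled in", but carrying no more information than NA.
PLACEHOLDERS = {"", "unknown", "n/a", "na", "-", "none"}


def fill_rates(values: list[Any]) -> tuple[float, float]:
    """Return (raw fill rate, effective fill rate) for a single variable."""
    if not values:
        raise ValueError("no values to check")
    filled = [v for v in values if v is not None]
    # A value only counts as informative if it is not a known placeholder.
    informative = [v for v in filled if str(v).strip().lower() not in PLACEHOLDERS]
    return len(filled) / len(values), len(informative) / len(values)
```

For example, a variable with 99 "Unknown" values and one real value has a raw fill rate of 100% but an effective fill rate of only 1%.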

For the string variables, we look at the length of the strings and count the words, sentences, and characters. For the description and title fields, we also look at which words are used most frequently. These insights help determine which words we can extract from the description and title using NLP.
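The string checks could look roughly like this. It is a minimal sketch using only Python's standard library: splitting sentences on `.`, `!` and `?` is a naive assumption, and a real NLP pipeline would typically also remove stop words before counting frequent words. The function names are hypothetical.

```python
import re
from collections import Counter


def text_stats(text: str) -> dict[str, int]:
    """Character, word and sentence counts for one text field."""
    words = re.findall(r"\w+", text)
    # Naive sentence split on runs of ., ! or ?; empty fragments are ignored.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return {"characters": len(text), "words": len(words), "sentences": len(sentences)}


def frequent_words(texts: list[str], top_n: int = 10) -> list[tuple[str, int]]:
    """Most common lowercase words across all texts (e.g. all listing descriptions)."""
    counts = Counter(word.lower() for text in texts for word in re.findall(r"\w+", text))
    return counts.most_common(top_n)
```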

ID fields (unique identifiers) are often stored as strings. Because rounding and conversion errors can corrupt them, we check how many values are unique in a given table or subset. This helps us spot potential data quality issues.

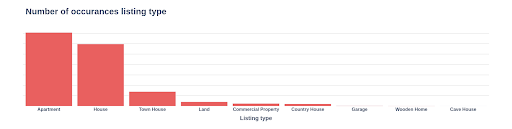
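A uniqueness check on ID fields can be as simple as counting values. The helper names below are hypothetical. The example at the end shows one real cause of conversion errors: above 2**53, a 64-bit float cannot represent every integer, so two distinct numeric IDs can collapse into one after a float conversion.

```python
from collections import Counter


def unique_share(ids: list[str]) -> float:
    """Share of distinct values; 1.0 means every ID is unique."""
    return len(set(ids)) / len(ids)


def duplicated_ids(ids: list[str]) -> dict[str, int]:
    """IDs that occur more than once, with their number of occurrences."""
    return {id_: n for id_, n in Counter(ids).items() if n > 1}


# Why IDs are safer as strings: 9007199254740993 (2**53 + 1) is not representable
# as a float and rounds to 9007199254740992, merging two distinct IDs.
raw_ids = ["9007199254740992", "9007199254740993", "42"]
converted_ids = [str(int(float(i))) for i in raw_ids]
```

With these inputs, `duplicated_ids(raw_ids)` returns an empty dict, while `duplicated_ids(converted_ids)` reports `"9007199254740992"` twice.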

Categorical variables are handled differently. Here we look at the number of unique values, the most frequent groups, and the frequency distribution. Another check is whether the categories are exhaustive and mutually exclusive.

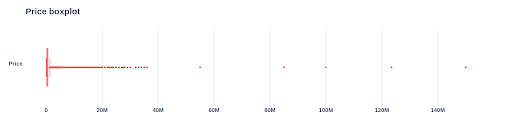
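For categorical variables, a profile along these lines covers the number of unique values, the most frequent groups and the frequency distribution. The `expected` set of categories is an assumption you would supply per field, and `categorical_profile` is a hypothetical name. The sketch only checks the exhaustiveness side (values outside the expected set), since mutual exclusivity usually depends on domain knowledge.

```python
from collections import Counter
from typing import Any, Hashable, Optional


def categorical_profile(
    values: list[Hashable],
    expected: Optional[set[Hashable]] = None,
    top_n: int = 5,
) -> dict[str, Any]:
    """Unique values, most frequent groups and frequency distribution of a category."""
    counts = Counter(values)
    total = len(values)
    profile: dict[str, Any] = {
        "n_unique": len(counts),
        "most_frequent": counts.most_common(top_n),
        "distribution": {value: n / total for value, n in counts.items()},
    }
    if expected is not None:
        # Values outside the expected set suggest the categories are not exhaustive
        # or not consistently encoded (e.g. "for sale" next to "for-sale").
        profile["unexpected_values"] = sorted(set(counts) - expected, key=str)
    return profile
```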

When looking at numeric variables, the distribution comes in handy: it can teach you a great deal about a given variable. For example, a negative price points out that this variable has data quality issues. Likewise, latitude and longitude have a fixed valid range (-90 to 90 and -180 to 180 degrees, respectively), so an observation that falls outside this range is unusable.
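Range checks like these are easy to automate. This sketch assumes hypothetical field names (`price`, `latitude`, `longitude`) and coordinates in decimal degrees; for every impossible value it reports the row index and the reason.

```python
from typing import Any

# Valid ranges for coordinates in decimal degrees; field names below are hypothetical.
LAT_RANGE = (-90.0, 90.0)
LON_RANGE = (-180.0, 180.0)


def numeric_issues(rows: list[dict[str, Any]]) -> list[tuple[int, str]]:
    """Return (row index, reason) for every impossible numeric value."""
    issues = []
    for i, row in enumerate(rows):
        price, lat, lon = row.get("price"), row.get("latitude"), row.get("longitude")
        if price is not None and price < 0:
            issues.append((i, "negative price"))
        if lat is not None and not LAT_RANGE[0] <= lat <= LAT_RANGE[1]:
            issues.append((i, "latitude out of range"))
        if lon is not None and not LON_RANGE[0] <= lon <= LON_RANGE[1]:
            issues.append((i, "longitude out of range"))
    return issues
```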

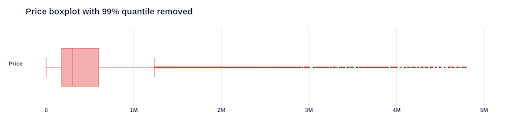

The boxplot above isn’t very readable because of the many outliers. If we remove the top 1% of the observations, we get a more readable graph. That said, the first boxplot is still useful: it clearly indicates that outliers are present.

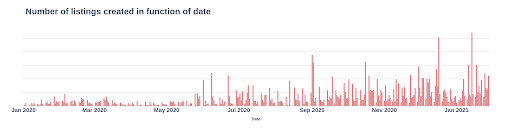
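Removing the top 1% before plotting can be sketched as follows. The function `drop_top_share` is hypothetical; it simply drops the largest observations by rank and returns the rest sorted, which is fine for a boxplot. The plotting step itself is left out.

```python
def drop_top_share(values: list[float], share: float = 0.01) -> list[float]:
    """Return the values, sorted, without the largest `share` of observations."""
    ordered = sorted(values)
    # round() avoids losing an observation to floating-point error (e.g. 1.9999 -> 1).
    n_drop = round(len(ordered) * share)
    return ordered[: len(ordered) - n_drop]
```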

Lastly, for the timestamp variables, the minimum and maximum are a good starting point. The next step is to look at the distribution over dates and spot whether a specific date has considerably more or fewer occurrences than the others.
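For timestamp variables, the minimum, the maximum and the number of observations per date can be computed as below. Flagging a date as unusual when its count is more than `factor` times above or below the median daily count is an assumption for this sketch, not a fixed rule, and `timestamp_profile` is a hypothetical name.

```python
from collections import Counter
from datetime import datetime
from statistics import median
from typing import Any


def timestamp_profile(timestamps: list[datetime], factor: float = 3.0) -> dict[str, Any]:
    """Range of a timestamp variable plus dates with unusually many or few occurrences."""
    if not timestamps:
        raise ValueError("no timestamps to profile")
    per_day = Counter(ts.date() for ts in timestamps)
    typical = median(per_day.values())
    # A date is flagged when its count is far above or below the median daily count.
    unusual_days = sorted(
        day for day, n in per_day.items() if n > typical * factor or n < typical / factor
    )
    return {
        "min": min(timestamps),
        "max": max(timestamps),
        "per_day": per_day,
        "unusual_days": unusual_days,
    }
```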

User tracking data

These datasets are harder to analyze in a uniform way, as every portal is different. But we usually start with some fundamental insights, such as the number of sessions, events, and users.
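These fundamental insights boil down to a few counts. This sketch assumes each tracking event is a dict with hypothetical `user_id` and `session_id` fields; real tracking exports will name these differently.

```python
from typing import Any


def tracking_overview(events: list[dict[str, Any]]) -> dict[str, int]:
    """Number of events, distinct sessions and distinct users in a tracking log."""
    return {
        "n_events": len(events),
        "n_sessions": len({e["session_id"] for e in events}),
        "n_users": len({e["user_id"] for e in events}),
    }
```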

Secondly, we look at the timestamp variables and determine their range: the minimum and the maximum. As with the timestamps in the listings data, we look at the distribution over dates and spot whether a specific date has considerably more or fewer occurrences. It’s not uncommon to find that certain dates are missing entirely, which points to a data quality issue in the historical data.
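Missing dates can be detected by comparing the observed dates with the full calendar range between the first and the last one. A minimal sketch, with a hypothetical function name:

```python
from datetime import date, timedelta


def missing_dates(observed: list[date]) -> list[date]:
    """Calendar dates between the first and last observed date that never occur."""
    if not observed:
        return []
    seen = set(observed)
    missing = []
    current, end = min(seen), max(seen)
    # Walk the calendar one day at a time and collect the gaps.
    while current <= end:
        if current not in seen:
            missing.append(current)
        current += timedelta(days=1)
    return missing
```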

Last but not least, we look at the different events that occur on a portal. In the first phase of this step, we look at picture events, detail page visits, search events, contact events, and content events. If you’re already able to deduce these, you’ve taken a big step. In later phases, we expand this to portal-specific events and to the search queries users filled in.
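Deducing these generic event types usually means mapping each portal's own event names onto them. The mapping below (`EVENT_GROUPS`) is entirely hypothetical, since every portal uses different event names. Events that don't map to a group are counted as `unmapped`, which shows how much of the tracking data still needs a portal-specific interpretation.

```python
from collections import Counter

# Hypothetical mapping from portal-specific event names to the generic event groups.
EVENT_GROUPS = {
    "photo_view": "picture",
    "gallery_swipe": "picture",
    "listing_view": "detail_page",
    "search_submit": "search",
    "contact_form_submit": "contact",
    "call_agent_click": "contact",
    "blog_article_view": "content",
}


def event_group_counts(event_names: list[str]) -> Counter:
    """Count events per generic group; unknown event names are counted as 'unmapped'."""
    return Counter(EVENT_GROUPS.get(name, "unmapped") for name in event_names)
```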

Conclusion

We typically find that the minimum required fields are mostly present for all clients. However, differences in data management can present challenges: there are often anomalies in the data that can skew results or lead to undesirable outcomes.

“Clients often respond: ‘We’ve never looked at it in this way’, in other words, in a data-driven way”

A simple example of this is negative pricing. Negative pricing often happens as a result of human error (typos or a moment of inattentiveness). Other times, you could be dealing with fraud or spam. It’s always useful to spot the causes of these kinds of irregularities and tackle them at their root.

To conclude this article, we recommend that every company starting with AI performs an EDA at the beginning of its AI projects. And since a database isn’t a fixed object, we suggest repeating the EDA every quarter or every six months.

Check out our computer vision solutions
